Hello, welcome to my blog. In my previous posts I introduced the concept of classification. I talked about the operation of a linear classifier, how to learn the coefficients for a linear classifier using logistic regression, and I demonstrated how to implement logistic regression in Python.

In this post I want to talk about how we evaluate a classifier. Concretely, how do we know if a classifier is doing well or poorly on data? This question is the theme of this post.

ACCURACY

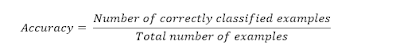

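This is usually the first metric used to judge a classifier. It is the fraction of predictions that the classifier got correct. Formally,

$$\text{accuracy} = \frac{\text{number of correct predictions}}{\text{total number of predictions}}$$

It has the following properties: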

- Its best possible value is 1 – this means the classifier got all of its predictions correct

- Its worst possible value is 0 – this means the classifier got all of its predictions wrong

The opposite of accuracy is classification error, which is the fraction of predictions the classifier got wrong. The accuracy and classification error of any classifier on the same data must sum to 1.

Accuracy is usually expressed as a percentage. For example, if a classifier gets 85 out of 100 predictions correct, it has an accuracy of 0.85 or 85%. A good classifier will have accuracy close to 1 while a bad classifier will have accuracy close to 0.
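To make the definition concrete, here is a minimal Python sketch of the 85-out-of-100 example. The function name `accuracy` and the label lists are made up for illustration; labels are assumed to be +1 or -1 as in the rest of this post.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("need at least one prediction")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical data: 100 reviews, 85 of them classified correctly.
y_true = [+1] * 50 + [-1] * 50
y_pred = [+1] * 45 + [-1] * 5 + [-1] * 40 + [+1] * 10

acc = accuracy(y_true, y_pred)
error = 1 - acc  # classification error is whatever accuracy leaves over
print(f"accuracy = {acc:.2f}, classification error = {error:.2f}")
```

Computing the error as `1 - acc` simply restates the fact that the two quantities sum to 1.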

FLAWS OF ACCURACY

If a classifier has high accuracy, it does not necessarily mean that you should trust its predictions. Let me give an example. Suppose a classifier correctly predicted the sentiment (positive or negative) of 9,900 out of 10,000 reviews. This means the classifier has an accuracy of 99%. This may seem very impressive, but what if on further investigation it was discovered that only 50 out of the 10,000 reviews had positive sentiment? Suddenly this accuracy score is no longer impressive, because a ‘classifier’ that predicted ŷ = -1 (i.e. negative sentiment) for every review would get 9,950 out of 10,000 reviews correct. That is an accuracy of 99.5%, which is better than the previous classifier. Keep in mind that this ‘classifier’ does not learn anything from the data – it always predicts ŷ = -1 for every review, and it still manages to do better than the previous classifier, which went through the process of learning from data.
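The arithmetic in this example can be checked with a few lines of Python. This is only a sketch: the label list is synthetic, built to match the counts above (10,000 reviews, 50 of them positive), and the variable names are my own.

```python
# Synthetic labels matching the example: 50 positive reviews, 9,950 negative.
y_true = [+1] * 50 + [-1] * 9950

# A 'classifier' that ignores the review and always predicts negative.
y_pred_baseline = [-1] * len(y_true)

correct = sum(1 for t, p in zip(y_true, y_pred_baseline) if t == p)
baseline_accuracy = correct / len(y_true)

# How many of the positive reviews did it actually find?
positives_found = sum(
    1 for t, p in zip(y_true, y_pred_baseline) if t == +1 and p == +1
)

print(f"baseline accuracy = {baseline_accuracy:.1%}")
print(f"positive reviews found = {positives_found} out of 50")
```

The always-negative baseline scores very well on accuracy while finding none of the positive reviews, which is exactly why the score is misleading here.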

This is the major flaw of using accuracy to measure the performance of a classifier. It can give misleading information about the performance of a classifier, especially in cases where the data is imbalanced, i.e. the majority of examples belong to one class. This tells us that accuracy alone is not good enough to judge how well a classifier is doing. Before I discuss other classification metrics, I want to talk about the confusion matrix.

CONFUSION MATRIX

A confusion matrix (also called a contingency table) is a way of displaying the predictions of a classifier. It helps us see how well a classifier’s predictions match the actual values in the data. For a binary classification problem, the table will be a 2×2 matrix. More generally, for an n-class classification problem (n stands for the number of classes), the table will be an n×n matrix. The confusion matrix for binary classification is shown below:

| | Predicted class: ŷ = +1 | Predicted class: ŷ = -1 |
|---|---|---|
| Actual class: y = +1 | True Positives (TP) | False Negatives (FN) |
| Actual class: y = -1 | False Positives (FP) | True Negatives (TN) |

A perfect classifier would have all its predictions on the diagonal, i.e. in the cells labelled True Positives and True Negatives. The other cells describe the two kinds of error we can have. Let me define the terms in the confusion matrix:

- True Positives: These are reviews where the classifier predicted positive sentiment and the class of the review is truly positive.

- True Negatives: These are reviews where the classifier predicted negative sentiment and the class of the review is truly negative.

- False Positives: These are reviews where the classifier predicted positive sentiment but the actual class of the review is negative.

- False Negatives: These are reviews where the classifier predicted negative sentiment but the actual class of the review is positive.
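The four definitions above translate directly into a counting loop. The sketch below is illustrative only: `confusion_counts` is a name I made up, the eight labels are invented, and labels are assumed to be +1 (positive) or -1 (negative).

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FN, FP, TN) for labels in {+1, -1}."""
    tp = fn = fp = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == +1 and p == +1:
            tp += 1  # predicted positive, truly positive
        elif t == +1 and p == -1:
            fn += 1  # predicted negative, actually positive
        elif t == -1 and p == +1:
            fp += 1  # predicted positive, actually negative
        elif t == -1 and p == -1:
            tn += 1  # predicted negative, truly negative
        else:
            raise ValueError(f"unexpected label pair: {(t, p)}")
    return tp, fn, fp, tn


# Hypothetical labels for eight reviews.
y_true = [+1, +1, +1, -1, -1, -1, -1, +1]
y_pred = [+1, -1, +1, -1, +1, -1, -1, +1]

tp, fn, fp, tn = confusion_counts(y_true, y_pred)
print(f"TP={tp}  FN={fn}")
print(f"FP={fp}  TN={tn}")
```

The two printed rows follow the same layout as the 2x2 table: actual positives on the first row, actual negatives on the second.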

OTHER CLASSIFICATION METRICS – PRECISION & RECALL

PRECISION

Precision, also known as positive predictive value, is defined as the fraction of positive predictions that are truly positive. For the sentiment classification problem, precision asks – What fraction of the reviews we predicted as having positive sentiment actually had positive sentiment? Formally,

$$\text{precision} = \frac{TP}{TP + FP}$$

If a classifier has high precision, it means that if it makes a positive prediction, it is very likely to be correct. Like accuracy, its best possible value is 1 and its worst possible value is 0.

RECALL

Recall is defined as the fraction of positive examples that were correctly predicted to be positive. It is a measure of how complete the predictions are. For the sentiment classification problem, recall asks – What fraction of the positive reviews did we correctly classify as having positive sentiment? Formally,

$$\text{recall} = \frac{TP}{TP + FN}$$

If a classifier has high recall, it means it was able to identify most (or all) of the actually positive examples. Like accuracy, its best possible value is 1 and its worst possible value is 0.
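Here is a small Python sketch that computes both metrics from confusion-matrix counts, using the standard definitions precision = TP / (TP + FP) and recall = TP / (TP + FN). The function names and the counts are hypothetical, and returning 0.0 when a denominator is zero is a convention I am assuming for this sketch, not a universal rule.

```python
def precision(tp, fp):
    """Fraction of positive predictions that are truly positive."""
    predicted_positive = tp + fp
    # Assumed convention: no positive predictions at all gives 0.0.
    return tp / predicted_positive if predicted_positive else 0.0


def recall(tp, fn):
    """Fraction of actual positives that were predicted positive."""
    actual_positive = tp + fn
    # Assumed convention: no positive examples at all gives 0.0.
    return tp / actual_positive if actual_positive else 0.0


# Hypothetical counts from a sentiment classifier on 1,000 reviews.
tp, fn, fp, tn = 40, 10, 20, 930

p = precision(tp, fp)  # 40 / (40 + 20)
r = recall(tp, fn)     # 40 / (40 + 10)
print(f"precision = {p:.3f}, recall = {r:.3f}")
```

Note that the true negatives do not appear in either formula, which is why these metrics are not inflated by a large negative class the way accuracy is.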

PRECISION-RECALL EXTREMES

You can think of a model with high recall and low precision as being optimistic – it predicts almost everything as positive. Therefore, it is likely to capture all the positive reviews, but it will most definitely include a lot of negative reviews.

A model with high precision and low recall can be thought of as being pessimistic – it only predicts positive when it is very sure. Therefore, if it predicts that a review has positive sentiment, it is very likely to be so, but it will also miss out on the positive reviews it was not sure about.

The question now is – Can we trade off precision against recall? Yes!

TRADING OFF PRECISION & RECALL

In a previous post, I said that the probability the classifier assigns to an example is used to decide which class that example is predicted to belong to.

To make a high precision model, we increase the probability threshold for classifying a review as positive. This will reduce the number of reviews that are predicted as having positive sentiment. The reviews that are predicted as having positive sentiment will be the ones we are very sure of.

To make a high recall model, we decrease the probability threshold for classifying a review as positive. This increases the number of reviews that are classified as positive. While we are likely to capture all the positive reviews, many negative reviews will also be included in our predictions.
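The effect of moving the threshold can be seen in a short Python sketch. Everything here is made up for illustration: the ten probabilities stand in for a classifier's estimate that each review is positive, the labels are invented, and a review is predicted positive when its probability is at least the threshold.

```python
def predict(probabilities, threshold):
    """Predict +1 when the probability is at least the threshold, else -1."""
    return [+1 if prob >= threshold else -1 for prob in probabilities]


def precision_recall(y_true, y_pred):
    """Compute (precision, recall) for labels in {+1, -1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == +1 and p == +1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == -1 and p == +1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == +1 and p == -1)
    # Assumed convention: an empty denominator gives 0.0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical probabilities of positive sentiment and true labels.
probabilities = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
y_true = [+1, +1, -1, +1, +1, -1, +1, -1, -1, -1]

results = {}
for threshold in (0.25, 0.50, 0.85):
    y_pred = predict(probabilities, threshold)
    results[threshold] = precision_recall(y_true, y_pred)
    prec, rec = results[threshold]
    print(f"threshold={threshold:.2f}  precision={prec:.3f}  recall={rec:.3f}")
```

On this toy data, raising the threshold from 0.25 to 0.85 pushes precision up and recall down, which is the trade-off described above. Real data will not always move this smoothly, so treat it as a sketch rather than a guarantee.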

OTHER CLASSIFICATION METRICS

There are other metrics which can be used to judge the performance of a classifier. I will just list some of them here:

- Sensitivity

- Specificity

- F1 Score

- Kappa statistic

SUMMARY

In this post, I talked about accuracy and its flaws when dealing with imbalanced data. I also discussed precision and recall and how we balance them to achieve either an optimistic or pessimistic classifier. I ended the post by mentioning some other classification metrics.

Thank you once again for reading my blog and don’t forget to add your email to the mailing list. Please leave a comment – it serves as feedback which is good for this blog. Enjoy the rest of your weekend. Cheers!!!
