Hello, welcome to my blog. It’s been a while since my last post, mostly due to some personal projects I have been doing, laziness :) and other factors. Anyway, in this post I want to introduce another project I did for the Statistics with R specialization. You can see it by following this link.

Tuesday, 13 September 2016

Thursday, 4 August 2016

WATCHING THE OLYMPICS IN NIGERIA

Hello, welcome to my blog. This is the first of the articles I will be sharing from Jumia Travel. As I said in my previous post, I am in a kind of partnership with them, so I will regularly post the articles they send me. I hope you enjoy it.

Saturday, 30 July 2016

PREDICTING CRITICS AND AUDIENCE SCORES FOR MOVIES

Hello, welcome to my blog. I know it’s been a long time since my last post, and I apologize for that. I have been quite busy for the past few weeks with some projects and have not had any time to write. One of the things that has kept me busy is the courses I have been taking on Coursera, particularly the Statistics with R specialization.

In this post, I will present the project I did for one of the courses in this specialization.

Sunday, 3 July 2016

AD OR NON-AD?

Hello, welcome to my blog. Apologies for the delay in writing this post; I have been a little preoccupied lately. Thankfully, I have been able to create time to write it. In this post, I am going to address the problem of distinguishing images that are ads from those that are not. Concretely, given an image, the goal is to determine whether it is an advertisement (“ad”) or not an advertisement (“non-ad”). I am going to use the R programming language for this demonstration.

Sunday, 12 June 2016

IMPLEMENTING ENSEMBLE METHODS WITH PYTHON

Hello, welcome to my blog. In my previous post I introduced the concept of ensemble classifiers. I also talked about how they work and about two popular ensemble methods: Boosting and Random Forests.

In this post I want to demonstrate how to implement these two ensemble methods using the GraphLab library in Python. I will use the same dataset (the LendingClub dataset) so we can compare the performance of the single tree model to that of the ensemble model.

Monday, 30 May 2016

ENSEMBLE CLASSIFIERS

Hello, welcome to my blog. Recently, I have been talking about two classification algorithms, namely logistic regression and decision trees. I also demonstrated how to implement these algorithms using Python’s scikit-learn library.

Today, I want to talk about ensemble classifiers. The fundamental idea behind an ensemble classifier is to combine a set of classifiers into one better classifier. Concretely, an ensemble classifier combines two or more classifiers (each called a weak learner or weak classifier) to make a stronger classifier (called a strong learner or strong classifier).
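To make the idea of combining weak learners concrete, here is a minimal Python sketch of the simplest combining rule, a plain majority vote. The three "stumps" are hypothetical weak learners that each look at a single feature; they are illustrative assumptions, not part of any library. Boosting, for example, weights each learner's vote instead of counting them equally.

```python
from collections import Counter
from typing import Callable, List, Sequence

# A "weak learner" here is any function that maps a feature vector to a label.
Classifier = Callable[[Sequence[float]], int]


def majority_vote(classifiers: List[Classifier], x: Sequence[float]) -> int:
    """Return the label predicted by most of the classifiers for input x."""
    votes = Counter(clf(x) for clf in classifiers)
    label, _count = votes.most_common(1)[0]
    return label


# Three hypothetical weak learners, each thresholding one feature only.
def stump_a(x: Sequence[float]) -> int:
    return 1 if x[0] > 0.5 else 0


def stump_b(x: Sequence[float]) -> int:
    return 1 if x[1] > 0.5 else 0


def stump_c(x: Sequence[float]) -> int:
    return 1 if x[2] > 0.5 else 0


ensemble = [stump_a, stump_b, stump_c]

print(majority_vote(ensemble, [0.9, 0.8, 0.1]))  # 1 (two of three vote 1)
print(majority_vote(ensemble, [0.9, 0.1, 0.2]))  # 0 (two of three vote 0)
```

Using an odd number of binary voters avoids ties; with more classes or an even number of learners a tie-breaking rule would be needed.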

Saturday, 21 May 2016

IMPLEMENTING DECISION TREES WITH PYTHON

Hello, welcome to my blog. In my previous post I introduced another classification algorithm called decision trees. In this post I want to demonstrate how to implement decision trees using the scikit-learn library in Python.
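scikit-learn hides the details of how a tree is grown. As a rough, dependency-free sketch of what happens at a single node, the code below picks the threshold on one numeric feature that minimises the weighted classification error of the two resulting leaves, i.e. it learns a depth-1 tree (a "decision stump"). This is an illustrative assumption, not scikit-learn's algorithm: its `DecisionTreeClassifier` uses Gini impurity by default. The data is made up.

```python
from typing import Optional, Sequence, Tuple


def classification_error(labels: Sequence[int]) -> float:
    """Fraction of labels that disagree with the majority label (binary 0/1)."""
    if not labels:
        return 0.0
    ones = sum(labels)
    return min(ones, len(labels) - ones) / len(labels)


def best_split(xs: Sequence[float], ys: Sequence[int]) -> Tuple[Optional[float], float]:
    """Try every observed value as a threshold (x <= t goes left) and keep
    the one whose two leaves have the lowest weighted classification error."""
    best_threshold, best_error = None, float("inf")
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        error = (len(left) * classification_error(left)
                 + len(right) * classification_error(right)) / len(ys)
        if error < best_error:
            best_threshold, best_error = threshold, error
    return best_threshold, best_error


# Hypothetical data: one feature and a 0/1 label.
xs = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0]
ys = [0, 0, 0, 1, 1, 1]
print(best_split(xs, ys))  # (3.0, 0.0)
```

A full tree simply repeats this search recursively inside each leaf, over every feature, until a stopping condition (such as a maximum depth) is reached.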

Thursday, 12 May 2016

DECISION TREES

Hello, welcome to my blog. Recently, I have been talking about classification. I have talked about linear classifiers and how to use logistic regression to learn the coefficients of a linear classifier. Furthermore, I demonstrated how to implement logistic regression using the scikit-learn library in Python, and I talked about evaluation metrics for classifiers.

Saturday, 30 April 2016

EVALUATION METRICS FOR CLASSIFIERS

Hello, welcome to my blog. In my previous posts I introduced the concept of classification. I talked about how a linear classifier works and how to learn its coefficients using logistic regression, and I demonstrated how to implement logistic regression in Python.

In this post I want to talk about how we evaluate a classifier. Concretely, how do we know whether a classifier is doing well or poorly on data? This question is the theme of this post.
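One common way to answer that question is to compare a classifier's predictions with the true labels. The sketch below computes three widely used metrics (accuracy, precision and recall) from the counts of true/false positives and negatives. It assumes binary labels with 1 as the positive class; the labels themselves are made up for illustration.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    # Fraction of all predictions that were correct.
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + fn + tn)


def precision(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    # Of everything predicted positive, how much really was positive?
    tp, fp, _, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fp) if tp + fp else 0.0


def recall(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    # Of everything that really was positive, how much did we find?
    tp, _, fn, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fn) if tp + fn else 0.0


# Hypothetical true labels and predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(accuracy(y_true, y_pred))   # 0.75
print(precision(y_true, y_pred))  # 2/3
print(recall(y_true, y_pred))     # 2/3
```

Accuracy alone can be misleading when one class is much rarer than the other, which is why precision and recall are often reported alongside it.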

Saturday, 23 April 2016

IMPLEMENTING LOGISTIC REGRESSION IN PYTHON

Hello there. Welcome to my blog. In the last post I talked about logistic regression, the algorithm used to learn the coefficients of linear classifiers. In case you have not read it, click here.

In this post, I want to demonstrate how to implement logistic regression using the scikit-learn library in Python. I will show the full program at the end of the post; here I will just display screenshots of the program’s results.

Thursday, 14 April 2016

LOGISTIC REGRESSION

Hello, welcome to my blog. In my previous posts I introduced the concept of classification and described how a linear classifier works. Basically, a linear classifier computes a score for an input and assigns the input to a class based on the value of that score.
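Logistic regression turns that score into a probability with the sigmoid function and learns the coefficients from data. The sketch below is a minimal, dependency-free illustration under stated assumptions: it uses plain batch gradient ascent on the log-likelihood (step size and iteration count chosen for this tiny made-up dataset), not the solver scikit-learn uses.

```python
import math
from typing import List, Sequence


def sigmoid(score: float) -> float:
    """Squash a score from (-inf, inf) into a probability in (0, 1)."""
    if score >= 0:
        return 1.0 / (1.0 + math.exp(-score))
    e = math.exp(score)  # numerically safer form for large negative scores
    return e / (1.0 + e)


def linear_score(weights: Sequence[float], x: Sequence[float]) -> float:
    # weights[0] is the intercept; the remaining weights pair up with the features.
    return weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))


def fit_logistic(xs: List[List[float]], ys: List[int],
                 step: float = 0.05, iterations: int = 1000) -> List[float]:
    """Learn coefficients by gradient ascent on the log-likelihood."""
    weights = [0.0] * (len(xs[0]) + 1)
    for _ in range(iterations):
        grad = [0.0] * len(weights)
        for x, y in zip(xs, ys):
            error = y - sigmoid(linear_score(weights, x))  # label minus P(y=1 | x)
            grad[0] += error
            for j, xj in enumerate(x, start=1):
                grad[j] += error * xj
        weights = [w + step * g for w, g in zip(weights, grad)]
    return weights


def predict(weights: Sequence[float], x: Sequence[float]) -> int:
    # P(y=1 | x) > 0.5 exactly when the score is positive.
    return 1 if sigmoid(linear_score(weights, x)) > 0.5 else 0


# Hypothetical one-feature data: small values are class 0, large values class 1.
xs = [[0.5], [1.0], [1.5], [3.5], [4.0], [4.5]]
ys = [0, 0, 0, 1, 1, 1]
w = fit_logistic(xs, ys)
print(w)
print([predict(w, x) for x in xs])
```

Note that thresholding the probability at 0.5 gives exactly the same decision as checking the sign of the score, so the learned model is still a linear classifier.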

Monday, 4 April 2016

LINEAR CLASSIFIERS

Hello, welcome to my blog. In the previous post I talked about classification and its popular real-world applications. I also listed the popular algorithms used for classification. Now I want to introduce the concept of a linear classifier. For this post, I will use a restaurant review system as a practical case study to make the concept of linear classifiers clear.
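For the restaurant review case, a linear classifier can be sketched as "give every word a weight, add up the weights of the words in a review, and look at the sign of the total". The word weights below are hypothetical, hand-picked values for illustration; in practice they are learned from labelled reviews.

```python
from typing import Dict


def review_score(review: str, weights: Dict[str, float]) -> float:
    """Score = sum of the weights of the words in the review (unknown words count 0)."""
    return sum(weights.get(word, 0.0) for word in review.lower().split())


def classify_review(review: str, weights: Dict[str, float]) -> str:
    # A linear classifier only looks at the sign of the score.
    return "positive" if review_score(review, weights) > 0 else "negative"


# Hypothetical coefficients; real ones would be learned from data.
weights = {"good": 1.0, "great": 1.5, "awesome": 2.0,
           "bad": -1.0, "terrible": -2.0, "awful": -2.0}

print(classify_review("The sushi was great and the service was good", weights))  # positive
print(classify_review("Awful food and terrible service", weights))               # negative
```

In this sketch a score of exactly zero is labelled negative; that tie rule is an arbitrary choice.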

Thursday, 31 March 2016

CLASSIFICATION

Hello, welcome to my blog. In this post I am going to talk about another popular application of machine learning: classification. First, let me define classification. It is the allocation (or organization) of items into groups (or categories) according to type. In the context of machine learning, classification means using the features of an item to predict which class (out of two or more classes) it belongs to. It is one of the fundamental tools of machine learning.

Friday, 25 March 2016

WORD CLOUD VISUALIZATION IN R

Hello, welcome to my blog. In this post I want to demonstrate how to create a word cloud using the R programming language. For more information on the R programming language click here. A word cloud is an image composed of the words used in a particular text or subject, in which the size of each word indicates its frequency or importance.
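The post builds its word cloud in R. To show just the core idea behind "size indicates frequency", here is a small Python sketch (Python to match the other examples on this blog) that counts words and maps each count linearly onto a font-size range. The stopword list, the size range and the linear scaling are all assumptions for illustration; real word-cloud packages also handle layout and drawing.

```python
import re
from collections import Counter
from typing import Dict, FrozenSet


def word_sizes(text: str, min_size: int = 10, max_size: int = 40,
               stopwords: FrozenSet[str] = frozenset({"the", "a", "and", "of", "to", "is"})
               ) -> Dict[str, int]:
    """Map each word to a font size proportional to its frequency (linear scaling)."""
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in stopwords]
    counts = Counter(words)
    if not counts:
        return {}
    lo, hi = min(counts.values()), max(counts.values())
    span = hi - lo or 1  # avoid dividing by zero when all counts are equal
    return {w: round(min_size + (c - lo) * (max_size - min_size) / span)
            for w, c in counts.items()}


text = "Data data data science is fun and data science is useful"
print(word_sizes(text))  # {'data': 40, 'science': 20, 'fun': 10, 'useful': 10}
```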

Friday, 18 March 2016

THE BIAS-VARIANCE TRADEOFF

Hello, welcome to my blog. In this post, I want to talk about the bias-variance tradeoff, which is a very important topic in machine learning. Before I do that, let me lay the foundation. In my post on linear regression, I said that the goal of a linear regression model is to find parameters for our regression line that minimize the error between our predictions and the actual observations.

Thursday, 10 March 2016

IMPLEMENTING NEAREST NEIGHBOURS IN PYTHON

Hello, welcome to my blog. In the last post I introduced the concept of nearest neighbours and how it can be used for either prediction or classification. In case you have not read it, you can read it here.

In this post, I will show how to implement nearest neighbours in Python. This time it will be a little different because I will use my own code instead of a library (as I did in other posts). sklearn (a Python library) provides an implementation of nearest neighbours, but I think it is better to implement it myself so I can explain what is really happening in the program.
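In the same do-it-yourself spirit, here is a compact sketch of k-nearest neighbours with no libraries beyond the standard library. It assumes Euclidean distance and shows both uses mentioned above: classification (majority label of the k nearest points) and prediction (average target of the k nearest points). It is not the code from the original post, and both datasets are made up.

```python
import math
from collections import Counter
from typing import Any, List, Sequence, Tuple

Example = Tuple[Sequence[float], Any]  # (features, label or target)


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def k_nearest(train: List[Example], query: Sequence[float], k: int) -> List[Example]:
    """Return the k training examples closest to the query."""
    return sorted(train, key=lambda item: euclidean(item[0], query))[:k]


def knn_classify(train: List[Example], query: Sequence[float], k: int = 3) -> Any:
    # Classification: majority label among the k nearest neighbours.
    labels = [label for _, label in k_nearest(train, query, k)]
    return Counter(labels).most_common(1)[0][0]


def knn_regress(train: List[Example], query: Sequence[float], k: int = 3) -> float:
    # Prediction (regression): average target of the k nearest neighbours.
    neighbours = k_nearest(train, query, k)
    return sum(target for _, target in neighbours) / len(neighbours)


# Hypothetical labelled points.
points = [((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((2.0, 1.0), "A"),
          ((6.0, 6.0), "B"), ((7.0, 7.5), "B"), ((6.5, 7.0), "B")]
print(knn_classify(points, (1.2, 1.4)))  # A

# Hypothetical houses: (square footage,) -> price.
houses = [((1000.0,), 200000.0), ((1500.0,), 260000.0),
          ((2000.0,), 320000.0), ((3000.0,), 450000.0)]
print(knn_regress(houses, (1600.0,), k=2))  # 290000.0
```

Sorting the whole training set is O(n log n) per query, which is fine for a demonstration; libraries such as sklearn use spatial data structures to speed this up on large datasets.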

Monday, 29 February 2016

NEAREST NEIGHBOURS

Hello, welcome to my blog. In my previous posts I have talked extensively about linear regression and how it can be implemented in Python. Now, I want to talk about another popular technique in machine learning: nearest neighbours.

Tuesday, 23 February 2016

POLYNOMIAL REGRESSION

Hello, welcome to my blog. I introduced the concept of linear regression in my previous posts by giving the basic intuition behind it and showing how it can be implemented in Python. In the last post, I gave a precaution to observe when applying linear regression to a problem: make sure the relationship between the dependent and independent variables is LINEAR, i.e. it can be fitted with a straight line.

So, what do we do if a straight line cannot describe the relationship between the two variables we are working with? Polynomial regression helps to solve this problem.
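A quick way to see the idea is to fit both a straight line and a degree-2 polynomial to data that follows a curve. The sketch below assumes NumPy is installed and uses its `polyfit`/`polyval` functions on made-up data generated from y = 2 + 0.5x²; the polynomial fit is still linear regression, just on the features x and x².

```python
import numpy as np

# Hypothetical data that follows a curve (y = 2 + 0.5 x^2) rather than a straight line.
x = np.linspace(-3, 3, 13)
y = 2 + 0.5 * x ** 2

# Degree 1: an ordinary straight-line fit.
line = np.polyfit(x, y, deg=1)
# Degree 2: polynomial regression, i.e. linear regression on the features x and x^2.
quad = np.polyfit(x, y, deg=2)


def rss(coeffs: np.ndarray) -> float:
    """Residual sum of squares of a fitted polynomial (coefficients highest power first)."""
    return float(np.sum((np.polyval(coeffs, x) - y) ** 2))


print("straight line RSS:", rss(line))
print("quadratic RSS:    ", rss(quad))
print("quadratic coefficients (x^2, x, 1):", np.round(quad, 3))
```

The straight line leaves a large residual error because it cannot bend, while the quadratic recovers the generating coefficients almost exactly. With real, noisy data, very high degrees start fitting the noise, so the degree has to be chosen with care.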

Sunday, 14 February 2016

LINEAR REGRESSION ROUNDUP

Hello, welcome to my blog. In my previous posts, I have been talking about linear regression, which is a technique used to find the relationship between one or more explanatory variables (also called independent variables) and a response variable (also called the dependent variable) using a straight line. Furthermore, I said that when we have more than one explanatory variable it is called multiple linear regression. Finally, I also implemented both types of regression using Python.

As a roundup I will just mention some precautions that should be taken when applying linear regression. Here are some tips to remember:

Monday, 8 February 2016

IMPLEMENTING MULTIPLE LINEAR REGRESSION USING PYTHON

Hello, welcome to my blog. In this post I will introduce the concept of multiple linear regression. First, let me do a brief recap. In the last two posts, I introduced the concept of regression, which is basically a machine learning tool used to find the relationship between an explanatory (also called predictor or independent) variable and a response (or dependent) variable by modelling the relationship using the equation of a line, i.e.

y = a + bx

where a is the intercept, b is the slope, x is the explanatory variable and y is our prediction.

Up until now we have used only one explanatory variable to predict the response variable. This is usually not very accurate: if, for example, you are trying to predict the price of a house, the square footage of the house is not the only feature that determines its price. Other attributes such as the number of bedrooms and bathrooms, the location and many other features will contribute to the final price of the house.
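Multiple linear regression extends y = a + bx to one slope per feature: y = a + b₁x₁ + b₂x₂ + … The sketch below assumes NumPy and fits such a model by least squares with `numpy.linalg.lstsq`. The houses and prices are made up, and the prices are generated from a known rule so you can check that the fit recovers it; this is an illustration, not the code from the post.

```python
import numpy as np

# Hypothetical houses: [square footage, bedrooms, bathrooms].
features = np.array([
    [1000, 2, 1],
    [1500, 3, 2],
    [1800, 3, 1],
    [2400, 4, 3],
    [3000, 4, 2],
    [3500, 5, 3],
], dtype=float)
# Prices generated from a known rule so the fit can be checked:
# price = 50000 + 100*sqft + 10000*bedrooms + 5000*bathrooms
prices = 50000 + features @ np.array([100.0, 10000.0, 5000.0])

# Prepend a column of ones so the first coefficient plays the role of the intercept a.
X = np.column_stack([np.ones(len(features)), features])

# Least squares: choose the coefficients that minimise the sum of squared residuals.
coeffs, *_ = np.linalg.lstsq(X, prices, rcond=None)
print(np.round(coeffs, 2))  # intercept, then one slope per feature

new_house = np.array([1.0, 2000, 3, 2])  # the leading 1 multiplies the intercept
print(float(new_house @ coeffs))
```

The features must not be exact linear combinations of each other (for example, bathrooms always equal to bedrooms minus one); otherwise there is no unique set of coefficients to recover.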

Sunday, 31 January 2016

IMPLEMENTING LINEAR REGRESSION USING PYTHON

Hello once again. Welcome to my blog. In the last post I introduced linear regression, which is a powerful tool used to find the relationship between a response variable and one or more explanatory variables.

In this post, I will demonstrate how to implement linear regression using a popular programming language, Python. To perform linear regression in Python I will make use of libraries. You can think of them as plug-ins that add extra functionality to Python. The libraries I will be using are as follows:

i. Pandas (for loading data)
ii. NumPy (for arrays)
iii. statsmodels.api & statsmodels.formula.api (for linear regression)
iv. Matplotlib (for visualization)
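As a minimal sketch of how the loading and fitting steps fit together, assuming pandas and NumPy are installed: the code reads a CSV with pandas and fits price = a + b × square footage. Two substitutions are made to keep it small and self-contained. First, a tiny made-up CSV is embedded in place of the King County file, and its column names `sqft_living` and `price`, as well as the file name in the comment, are assumptions about that dataset's layout. Second, NumPy's `polyfit` stands in for the statsmodels regression used in the post.

```python
import io

import numpy as np
import pandas as pd

# A tiny made-up CSV standing in for the King County file; the column names
# sqft_living and price are assumptions about that dataset's layout.
csv_text = """sqft_living,price
1000,210000
1500,300000
2000,405000
2500,490000
3000,600000
"""
# With the real data this would be pd.read_csv("<path to the CSV>").
sales = pd.read_csv(io.StringIO(csv_text))

# Fit price = a + b * sqft_living; polyfit returns the slope first, then the intercept.
b, a = np.polyfit(sales["sqft_living"], sales["price"], deg=1)
print(f"intercept a = {a:.2f}, slope b = {b:.2f}")

# Predict the price of a hypothetical 1800 sq ft house.
print(f"predicted price: {a + b * 1800:.2f}")
```

statsmodels returns the same least-squares line, plus standard errors and other statistics in its summary, which is why the post uses it.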

For this demonstration, I will use the King County House Sales data to predict the price (in dollars) of a house using just one feature: the square footage of the house. This dataset contains information about houses sold in King County (the county in Washington State that includes Seattle). This dataset is public and can be accessed by anyone (a Google search should turn up a link to download it). It’s in CSV format (CSV stands for comma-separated values). To load the dataset we use the Pandas library. Once we have loaded the dataset we can use it to perform linear regression.

Sunday, 24 January 2016

MACHINE LEARNING ALGORITHMS – LINEAR REGRESSION

Hello once again. How has your week been? I hope it has been good. Thanks for visiting my blog once again. Today I would like to talk about one of the most popular and useful machine learning algorithms: linear regression.

First, what is regression? Regression basically describes the relationship between numbers. For example, there is a relationship between height (a number) and weight (another number): generally, weight tends to increase with height. Formally, regression is concerned with identifying the relationship between a single numeric variable we are interested in (called the dependent variable, response or outcome) and one or more other variables (called the independent variables or predictors). If there is only a single independent variable, this is called simple linear regression; otherwise it’s known as multiple linear regression.

What we assume in linear regression is that the relationship between the independent variable and the dependent variable follows a straight line. It models this relationship using the equation below:

y = a + bx

Where,

y – the dependent variable

x – the independent variable

a – intercept, the value of y when x = 0

b – slope, how much y changes when x increases by one unit

How Regression works

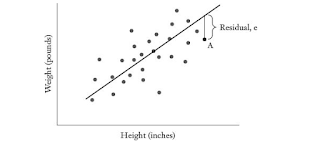

The goal of regression is to find a line that best fits our data. Let me illustrate with the following scatterplot showing the relationship between height (in inches) and weight (in pounds).

From the scatterplot, it can be seen that weight generally increases with height and vice versa. Now, how do we find the line that best fits this data? This is done by finding the line that has the lowest sum of squared residuals. Let me explain: the equation for y shown above generates a predicted value of y, which will differ from the actual value of y by some amount (called the residual or error). These residuals are squared and summed over all points in our data, and the line with the lowest sum of squared errors is chosen. This is done by adjusting a and b until they give the line that best fits our data. Let’s show the same data fitted with the line of best fit.

Although the fitted line does not pass through each point in the data, it does a pretty good job of capturing the trend in our data.
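The "sum of squared residuals" criterion is easy to compute directly. The sketch below scores two candidate lines on a few made-up height/weight pairs (not the data from the scatterplot): whichever (a, b) pair gives the smaller sum fits the data better, and the best-fit line is the one that makes this sum as small as possible.

```python
from typing import Sequence


def predict(a: float, b: float, x: float) -> float:
    return a + b * x


def sum_squared_residuals(a: float, b: float,
                          xs: Sequence[float], ys: Sequence[float]) -> float:
    """Residual = actual y minus predicted y; square each one and add them up."""
    return sum((y - predict(a, b, x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical heights (inches) and weights (pounds).
heights = [60, 62, 65, 68, 70, 72]
weights = [115, 120, 135, 150, 160, 170]

# Two candidate lines: the smaller sum means a better fit.
print(sum_squared_residuals(-200, 5, heights, weights))   # 725
print(sum_squared_residuals(0, 2.2, heights, weights))    # larger, so a worse fit
```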

How to choose a and b

Earlier on I said we choose the line whose a and b give the lowest sum of squared errors. How exactly do we do this? There are three common ways:

1. Ordinary least squares estimation.
2. Gradient descent.
3. The normal equation.

I won’t go into depth describing these methods, but a Google search for any of these terms will give you more information if you are interested in knowing more about them.
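For a single explanatory variable, two of these approaches fit in a few lines each. The sketch below computes a and b with the closed-form least-squares formulas (b = Sxy / Sxx, a = ȳ − b·x̄, which is also what the normal equation reduces to for one feature), and then finds the same line by gradient descent on the mean squared error. The data, step size and iteration count are made-up values chosen for this example.

```python
from typing import Sequence, Tuple


def ols_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Closed-form least squares for y = a + b*x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def gradient_descent_fit(xs: Sequence[float], ys: Sequence[float],
                         step: float = 0.01, iterations: int = 20000) -> Tuple[float, float]:
    """Minimise the mean squared error by repeatedly stepping a and b downhill."""
    a, b = 0.0, 0.0
    n = len(xs)
    for _ in range(iterations):
        residuals = [(a + b * x) - y for x, y in zip(xs, ys)]  # predicted minus actual
        grad_a = 2 * sum(residuals) / n
        grad_b = 2 * sum(r * x for r, x in zip(residuals, xs)) / n
        a -= step * grad_a
        b -= step * grad_b
    return a, b


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]
print(ols_fit(xs, ys))               # approximately (1.09, 1.97)
print(gradient_descent_fit(xs, ys))  # should agree closely with ols_fit
```

The closed form is exact and fast for small problems; gradient descent trades exactness for an iterative method that scales to models where no convenient closed form exists, at the cost of choosing a step size that is small enough to converge.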

Congratulations!!! Now you know about linear regression, one of the most powerful tools in machine learning. In the next post, I will demonstrate how to perform linear regression using a popular programming language, Python. If you have a question please feel free to drop a comment.

Thanks once again for visiting my blog; I hope you have a wonderful and productive week ahead. Cheers.

Saturday, 16 January 2016

WHAT IS MACHINE LEARNING


Hello everyone! Happy new year to you all. Sorry for the delay in making this post; I just started NYSC for real and believe me it's quite stressful, but there have been fun times too (I guess). Anyway, enough chit-chat, let's get to the topic of the day: what is machine learning? For me, this is a good place to start for anyone who has an interest in any topic (not just machine learning). What really is the thing I am interested in? That's the first question that I feel should be clearly answered. The objective of this post is to briefly define machine learning and give some of its popular applications.

According to Wikipedia, machine learning explores the study and construction of algorithms that can learn to make predictions from data rather than following static program instructions. Let me explain: machine learning uses data to make predictions. These predictions could be anything from saying what the weather will be tomorrow, to classifying a handwritten digit, recognizing a picture, or predicting the price of an item given its features.

All of the tasks just mentioned would be difficult to achieve using rigid programming rules. For example, a classic problem in machine learning is the classification of handwritten digits. Suppose we wanted to define what the digit '7' should look like; how would we do that? This would be difficult because people have different ways of writing the number '7', so writing rules that tell a program what the digit '7' is (or isn't) would be hard. In this case, the best option is for the program to 'learn' the parameters required to correctly classify a digit. To do this, we would collect samples of handwritten digits (data) and feed them to a machine learning algorithm. The output of this algorithm can then be used to classify digits.

Now that you know what machine learning is, let's look at some of its major uses (if you feel there are others, please feel free to add them in the comments section). Machine learning is used mainly for prediction, as I mentioned earlier. This can be further classified into:

i. Regression
ii. Classification

In regression, we use numbers to predict numbers. Let me use the popular example of trying to predict the price of a house. Assume we are trying to predict the price of a house and that we also have features (also called attributes) of this house, e.g. square footage, number of bedrooms, number of bathrooms, the year it was built and so on. The task is: given all these features (which are basically numbers), can we predict how much this house will sell for (another number)?

Classification is similar to regression; the only difference is that we are trying to predict a class instead of a number. A popular example of classification is spam filtering, where we use features of an email such as the words in the email, the sender’s name, the sender’s IP address etc. to predict whether the email is spam or not. This is called binary classification because we are trying to predict which of two classes an email (or the item to be classified) belongs to. Sometimes there may be more than two classes; in this case it’s called multi-class classification. A good example is the classification of handwritten digits, where we try to predict which of 10 possible classes a digit belongs to.

Another application of machine learning I would like to mention is in the area of product recommendation. This application is used extensively by companies such as Amazon (to recommend what shoppers may like to buy) and Netflix (to recommend movies to users). Machine learning also finds application in areas such as image recognition and classification, where neural networks are used to recognize and/or classify an image.

I hope this post has clearly explained what machine learning is and its applications. Please feel free to drop a comment about anything that is unclear to you. Thanks for reading my blog. Hope to see you soon. Cheers!!!
